Research Framework

Introduction

The National Center for Complementary and Integrative Health (NCCIH) provides support for research on a broad spectrum of complementary health approaches, with the goals of both understanding how these approaches work and developing scientific evidence to inform decisions made by the public, health care providers, and health policymakers.

Evidence from the scientific literature may provide a plausible rationale for research on many complementary interventions. However, investigators often lack critical information about biological effects of the intervention, outcome measures, logistical feasibility, or recruitment and retention strategies needed to design a rigorous and successful efficacy or effectiveness trial. In some cases, further feasibility testing is needed to address these and related issues prior to the design and initiation of a fully powered multisite clinical efficacy or effectiveness trial. In other cases, mechanistic or optimization trials can improve the understanding of how interventions work and provide an opportunity to enhance the effect size.

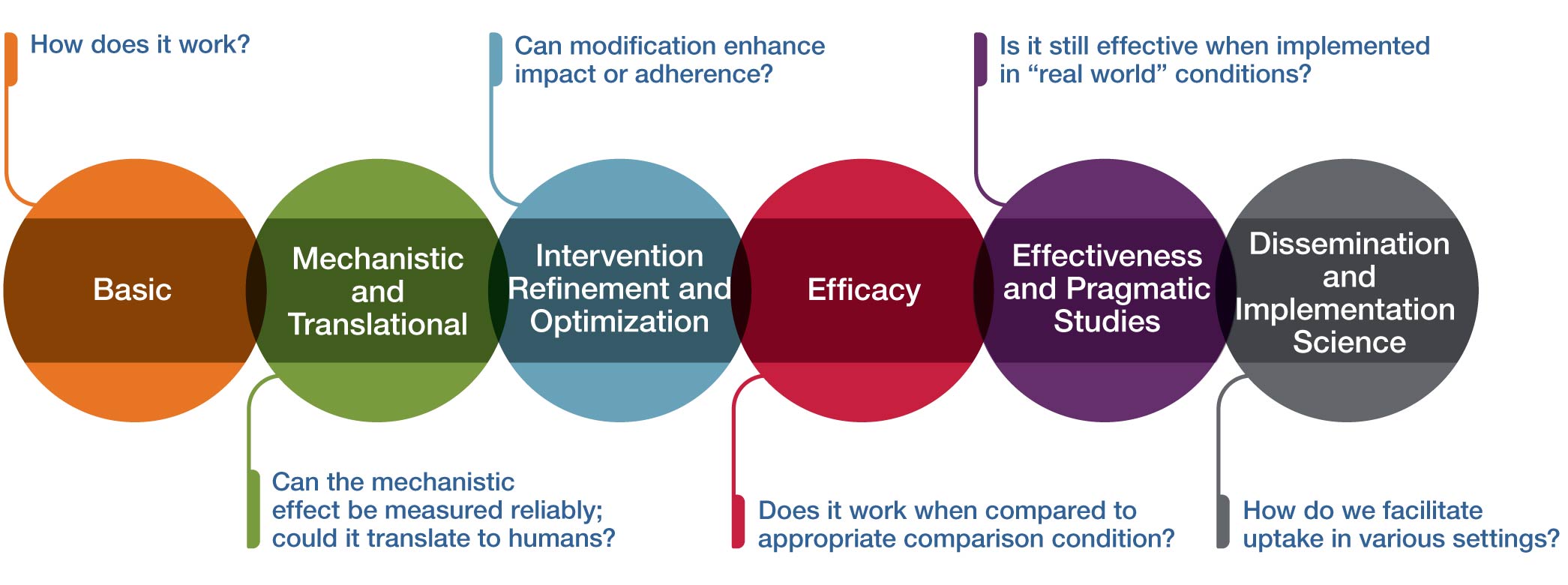

Multiphase paradigms for addressing these research needs are well described for pharmacologic interventions and have been successfully applied to the study of many dietary supplements, botanical medicines, and probiotics. The figure above provides an analogous conceptual framework for thinking about some of the unique challenges inherent in research on therapeutic inputs that include psychological and physical interventions (often called mind and body interventions). This framework can also be useful for technology-based intervention or device research.

The purpose of this framework is to:

- Provide a conceptual framework for NCCIH’s research on complementary and integrative health interventions

- Clearly delineate the purpose of each stage of research

- Help investigators align their research interests, questions, and approach with the appropriate stage of development

- Provide some guidance on the available or appropriate funding mechanism(s) for each stage of research

While this framework is presented in a linear fashion, investigation can be proposed at any stage along this continuum depending on extant data, the research environment and question, the clinical population, the investigative team, and other factors. Below, each stage (circle) of the conceptual framework is further described.

Basic

How does it work?

In the context of complementary and integrative health approaches, the initial stage in the conceptual framework is basic research. Basic research aims to investigate the approach or intervention itself, the systems without the intervention, or the associated technologies, in either preclinical models or human subjects. Preclinical basic research aims to determine whether the intervention of interest can demonstrate a biologically and/or clinically meaningful and measurable effect when employed. Basic clinical research primarily aims to clarify how the intervention works in humans.

Mechanistic and Translational

Can the mechanistic effect be measured reliably; could it translate to humans?

This stage covers mechanistic and translational research. Mechanistic research often refers to studies examining the interactions between the approaches/interventions and their targeted systems (e.g., biological, behavioral, psychological, and/or social). Mechanistic research may be carried out in preclinical models or human subjects and aims to determine whether the intervention of interest can demonstrate a biologically and/or clinically meaningful and measurable effect when employed. Translational research aims to take mechanistic results from animal or in vitro studies and use them to guide human studies to determine if the results will translate and generalize to the human population. Mechanistic and translational research has the goal of understanding how the intervention works in humans. The most rigorous mechanistic clinical trials use a carefully controlled randomized design to determine if the intervention has a specific effect on a biological measure or psychological process and whether the mechanistic impact can be reliably measured in humans (i.e., is the effect reproducible?).

Intervention Refinement and Optimization

Can modification enhance impact or adherence?

Rigorous clinical research requires an intervention that can be reliably replicated in multiple independent studies and optimized to increase adherence and potential impact. Hence, at this stage the intervention is more fully developed, refined, and/or standardized for research purposes. Optimization may involve determining the appropriate dose, frequency, or duration of the intervention; an optimal method of intervention delivery (in person, online, etc.); the appropriate participant population that is most responsive to the intervention; or the outcomes and measurement tools that are modifiable by the intervention. An intervention manual is developed and refined so the intervention can be delivered consistently to all research participants. Intervention refinement can be done in an iterative process with feasibility testing of the intervention along with feedback from participants. Key outcomes of feasibility testing include acceptability of the intervention protocol, adherence outcomes, and effectiveness of recruitment and retention methods. Interventions can be tailored to specific populations when necessary to enhance uptake and adherence to interventions. Multisite feasibility trials are often needed for mind and body interventions because of the need to demonstrate the ability to deliver the intervention with fidelity across sites and using several different interventionists.

Efficacy

Does it work when compared to an appropriate comparison condition?

This stage is the typical efficacy trial to test the clinical benefit of an intervention when delivered in a setting optimized to detect an effect and with high internal validity (highly trained providers, specific enrollment criteria, extensive follow-up, precise study protocol, etc.). NCCIH recommends that efficacy trials have a minimum of 90 percent power to detect clinically significant differences in outcome compared to a clinically relevant control group. The selection of the appropriate comparison group requires careful thought by the investigators. Each option for the comparison group slightly changes the research hypothesis of the trial and may have a profound impact on the number of participants needed. For example, a time and attention comparison with the same frequency and duration as the study intervention will provide a “control” intervention; thus, the hypothesis will assess whether the specific intervention has benefit beyond the attention paid to the participants. In contrast, an active comparison that is known to have benefit could be used to determine if the new study intervention is as good as or better than the active control. In this case, the trial has a noninferiority design and will usually require many more participants than a trial with a time and attention control. To enhance the generalizability of the efficacy trial, NCCIH requires trials with in-person study visits to be conducted across at least three geographically distinct sites (i.e., different regions of the United States). Efficacy trials that deliver the intervention and collect all data remotely can recruit nationally to obtain a representative patient population.
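As a rough illustration of why the choice of comparison group can change the number of participants needed, the sketch below applies the standard normal-approximation formula for comparing two means at 90 percent power. The function name `n_per_arm`, the standardized effect size of 0.5, and the noninferiority margin of 0.25 are hypothetical values chosen for illustration only; they are not NCCIH recommendations, and an actual trial would rely on a full statistical analysis plan.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(delta, power=0.90, alpha=0.05, two_sided=True):
    """Approximate participants per arm for a two-arm comparison of means.

    delta is a standardized quantity (in standard deviation units):
    the difference to detect in a superiority design, or the
    noninferiority margin when the true difference is assumed to be zero.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2) if two_sided else z(1 - alpha)
    z_beta = z(power)
    return ceil(2 * ((z_alpha + z_beta) / delta) ** 2)


# Hypothetical superiority trial against a time and attention control:
# detect a standardized difference of 0.5 with a two-sided alpha of 0.05.
superiority = n_per_arm(0.5)

# Hypothetical noninferiority trial against an active comparison:
# margin of 0.25 with a one-sided alpha of 0.025.
noninferiority = n_per_arm(0.25, alpha=0.025, two_sided=False)

print(superiority, noninferiority)
```

Because the required sample size scales with the inverse square of `delta`, a noninferiority margin half the size of the superiority effect needs roughly four times as many participants per arm under these assumptions.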

Effectiveness and Pragmatic Studies

Is it still effective when implemented in “real world” conditions?

This stage aims to determine the effects of the intervention as it would be administered in a “real world” setting (as opposed to an optimized research setting), with higher external validity. Effectiveness trials typically involve a broader and more heterogeneous range of intervention providers; generally have less restrictive enrollment criteria, resulting in greater heterogeneity in the participants; and typically allow a greater degree of flexibility, which mimics more closely the setting of real-world health care. This enhanced heterogeneity of participants and intervention delivery often results in a smaller effect size than was seen in the more precisely controlled efficacy trial, so power calculations should take this into account by providing 90 percent power in the trial for the primary outcome. Specific forms of effectiveness trials include comparative effectiveness research, in which two or more interventions are compared, and cost-effectiveness research, which gathers additional data on the costs of the intervention and other relevant cost information. There are some scenarios in which the most relevant research question is best answered through an effectiveness study rather than an efficacy study; however, it is more common for an efficacy study to be conducted prior to effectiveness trials. Embedded pragmatic trials seek to evaluate whether an intervention is effective when embedded into clinical care in a health care setting. Before pragmatic trials are conducted in the health care setting, it is critically important that the intervention has already demonstrated efficacy in multisite trials or multiple single-site trials.
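To make the point about smaller effect sizes concrete, the sketch below estimates the power of a two-arm comparison of means using a normal approximation. The function name `approx_power`, the sample size of 85 per arm, and the standardized effect sizes of 0.50 and 0.35 are hypothetical values assumed for illustration; they do not come from NCCIH guidance.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_arm, alpha=0.05):
    """Approximate power of a two-sided, two-arm comparison of means.

    effect_size is the standardized difference between arms
    (in standard deviation units); n_per_arm is participants per arm.
    """
    norm = NormalDist()
    z_alpha = norm.inv_cdf(1 - alpha / 2)
    return norm.cdf(effect_size * sqrt(n_per_arm / 2) - z_alpha)


# Hypothetical efficacy trial: effect size 0.50 with 85 participants per arm.
efficacy_power = approx_power(0.50, 85)

# Same sample size, but the effect shrinks to 0.35 under
# more heterogeneous real-world delivery (assumed value).
effectiveness_power = approx_power(0.35, 85)

print(round(efficacy_power, 2), round(effectiveness_power, 2))
```

Under these assumptions, a sample size that gives about 90 percent power in the efficacy setting falls well short of that target once the effect size shrinks, which is why the effectiveness trial needs its own power calculation.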

Dissemination and Implementation Science

How do we facilitate uptake in various settings?

The final stage of the framework is to take those interventions that have been found to be effective in real-world settings and determine how best to disseminate and implement them in clinical care or other appropriate settings. Dissemination research is the scientific study of targeted distribution of information and intervention materials to a specific public health or clinical practice audience. The intent is to understand how best to spread and sustain knowledge and the associated evidence-based interventions. Implementation science research is the scientific study of the strategies used to adopt and integrate evidence-based health interventions into clinical and community settings to improve patient outcomes and benefit population health. In some cases, very strong efficacy data for an intervention may justify hybrid effectiveness–implementation studies. For these studies, the aims of determining effectiveness of the intervention and simultaneously testing implementation strategies are equally important or cannot be reasonably split apart.